Project Description

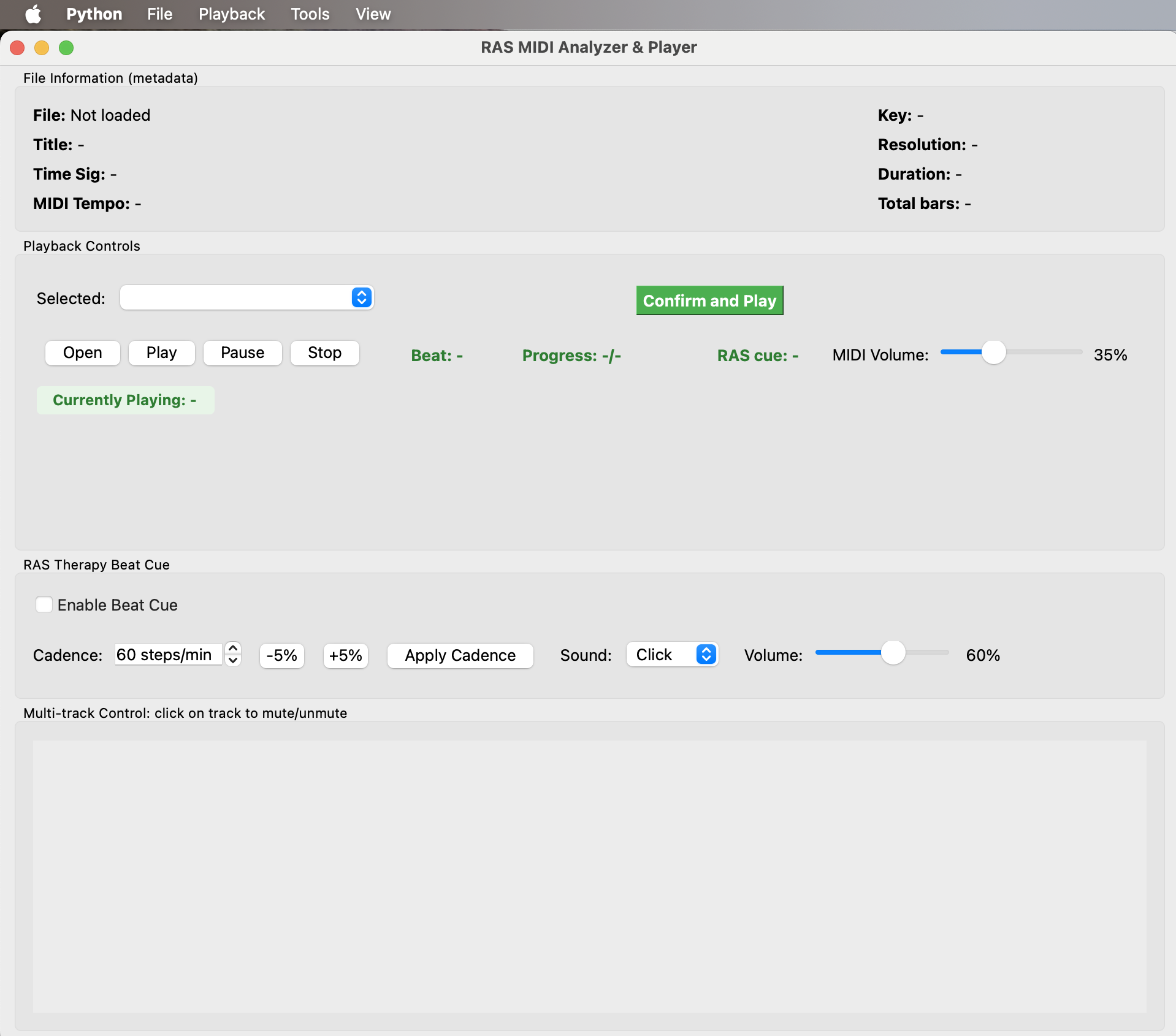

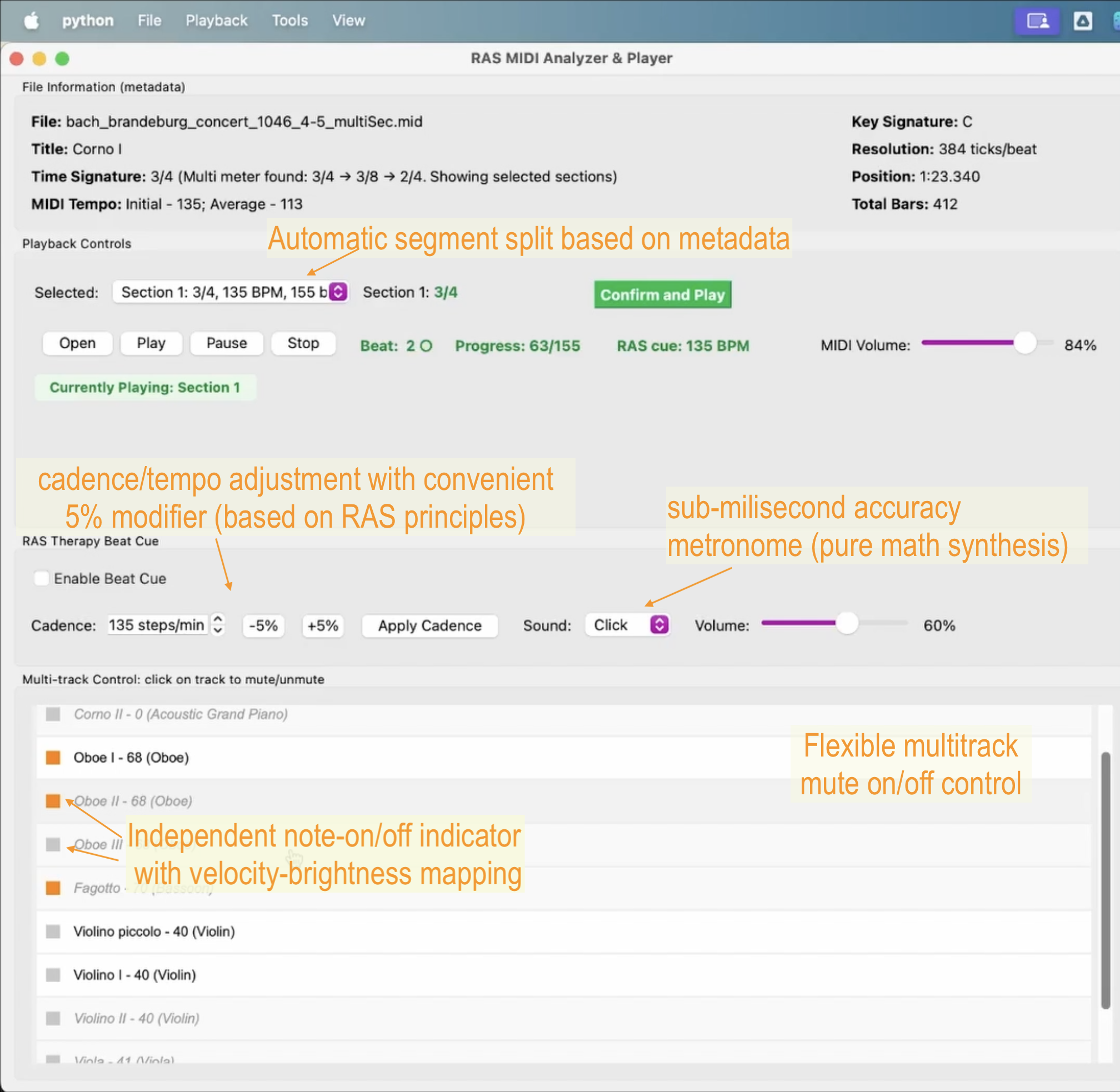

This project is a specialized platform designed to support clinicians working with Rhythmic Auditory Stimulation (RAS) therapy for gait rehabilitation. RAS is mainly applied as an alternative rehabilitation method for patients with Parkinson's disease, stroke, and other movement disorders. At its heart, the system tackles a fundamental challenge in music therapy: identifying which pieces of music are actually suitable for therapeutic use. While any MIDI player can produce sound, this platform goes deeper by analyzing the rhythmic structure of music across four dimensions: beat density, predictability, beat salience (accent patterns), and rhythmic uniformity. This is expected to help therapists judge whether a piece will support or hinder motor entrainment. To categorize music into clinically meaningful groups (duple vs. triple meter), the platform uses a dual-layer meter classification system that combines MIDI pattern analysis with neural network-based audio processing (RNN + DBN). Beyond analysis, the platform provides high-precision (sub-millisecond) playback with an integrated therapy metronome that can be synchronized precisely to the music, supporting cadences from 20 to 180 steps/min for gait training. A smaller tool, Smart Timing Correction, handles a common MIDI quantization error in which events are shifted off the metrical grid. The interface is deliberately simple, resembling a straightforward MIDI player, and surfaces the more sophisticated features only when needed.

Key Features

- High-Precision Playback: Sub-millisecond (±0.1 ms) MIDI playback timing with FluidSynth synthesis and real-time metronome synchronization

- 4D Rhythm Analysis: Quantifies musical rhythm across Beat Density, Predictability, Beat Salience, and Rhythmic Uniformity dimensions

- Smart Timing Correction: Pattern-based downbeat detection with neural network validation and MIDI event adjustment

- Batch Processing: Process-isolated analysis with dual-layer meter classification (MIDI + audio) and persistent caching

- RAS Therapy Tools: Direct cadence control (20-180 steps/min), unified downbeat cueing, and clinical gait training optimization

- Advanced Architecture: SQLite caching, atomic IPC, CLI automation, and cross-platform compatibility

Author's notes

This project is a refined successor to an ambitious early concept. The initial motivation came from my basic training in Neurologic Music Therapy last year (2024). My first idea was to simulate a complete RAS therapy session, including gait detection with computer vision tools such as MediaPipe Pose (https://github.com/tellmeayu/RAS-helper.git). However, given the technical complexity and the limited resources of a solo developer, I made the strategic decision to narrow the scope. This allows me to focus on my own major and devote all development effort to the platform's rhythm analysis core.

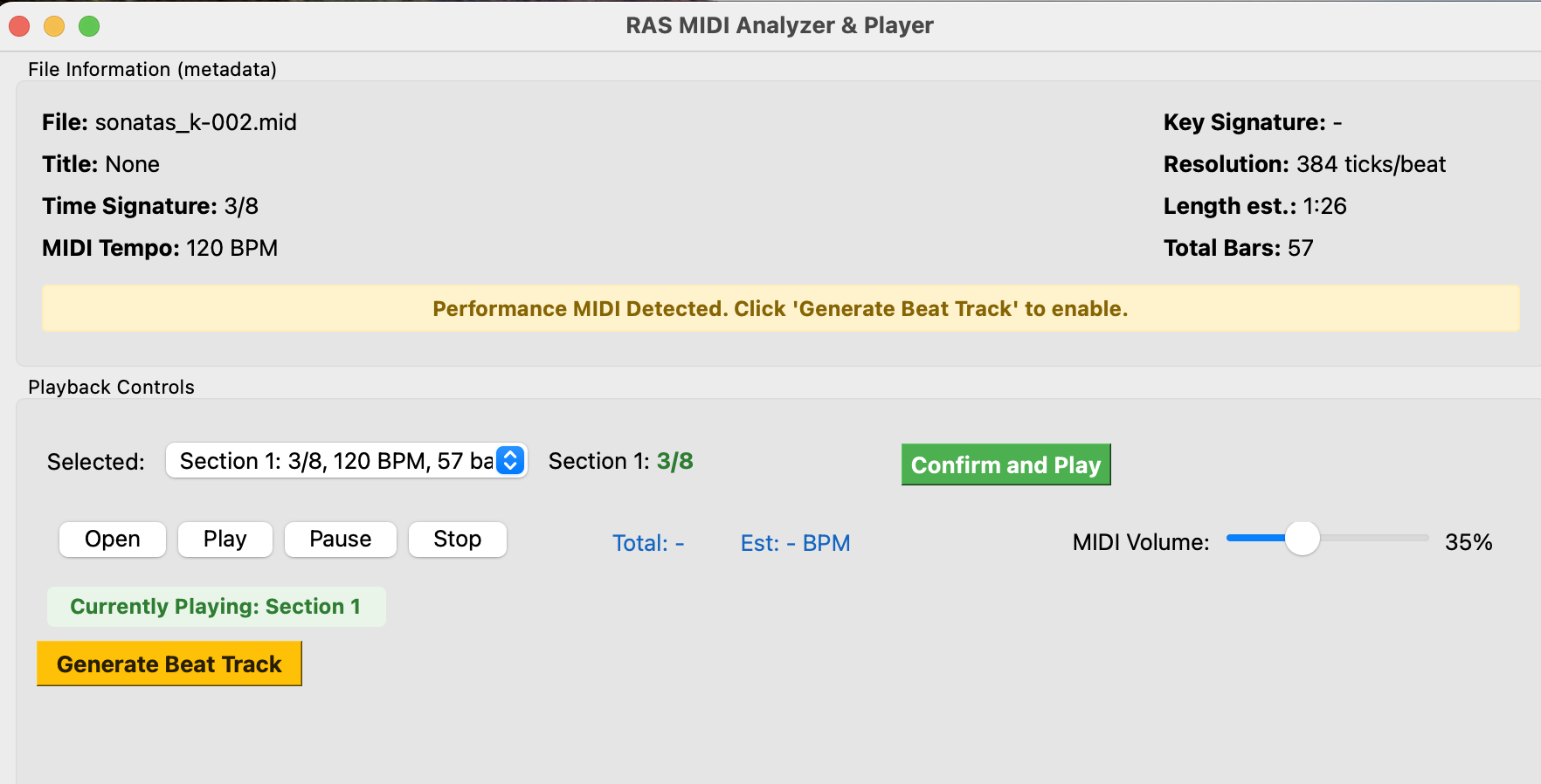

Playback basic

It looks like a simple MIDI player with an added tempo/cadence control! I designed the front end to be clean and intuitive, focused purely on basic operation. All the heavy lifting happens silently behind the scenes, and data surfaces only when it is specifically requested or automatically detected.

Once you open a MIDI file:

If the loaded MIDI file lacks sufficient metadata (e.g., Type 0), the system automatically enters an assisted analysis mode:

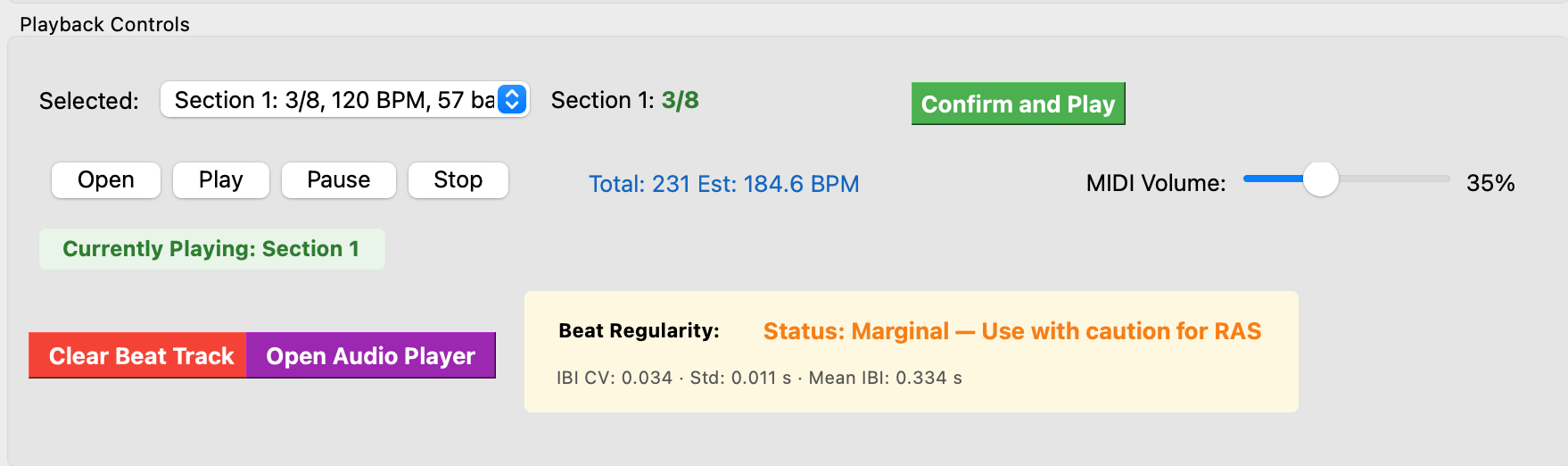

An additional audio player is provided, which plays the music mixed with a precisely synchronized metronome cue for training purposes. More importantly, once beat tracking finishes, the system immediately calculates Beat Regularity, which measures whether the piece is suitable for RAS gait training. The threshold used here is deliberately strict, given the training purpose.

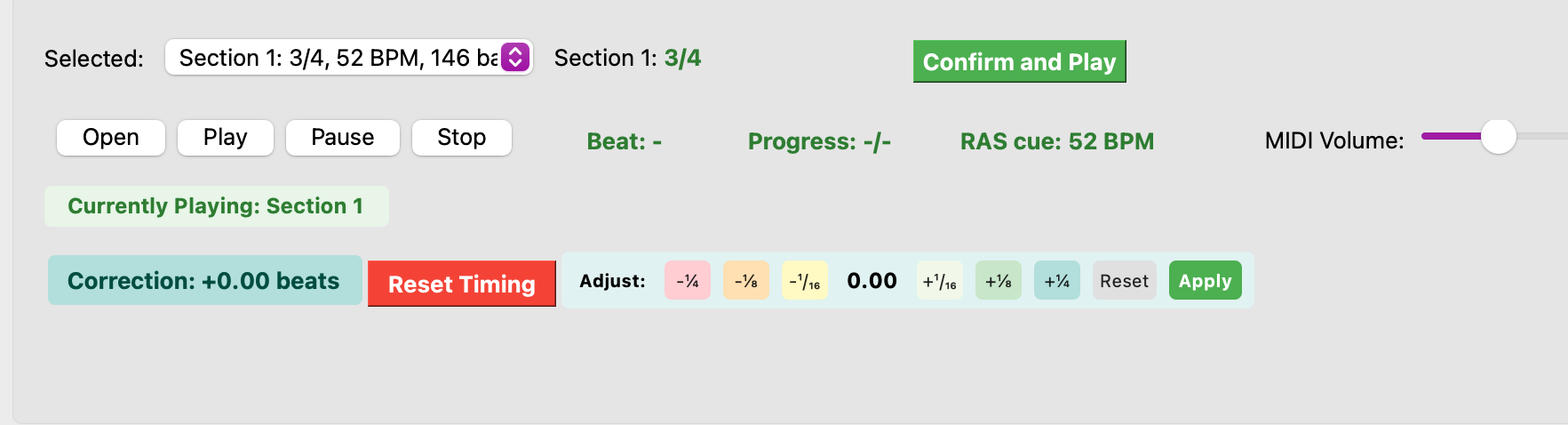

If a standard MIDI file exhibits a slight quantization shift that prevents metronome alignment, the "Timing Correction" tool is engaged. Once you apply the correction:

It calculates the optimal downbeat shift and applies it automatically. However, given the abstract and complex nature of musical rhythm, the algorithm's output isn't always precise. Manual fine-tuning controls are therefore provided for human validation.

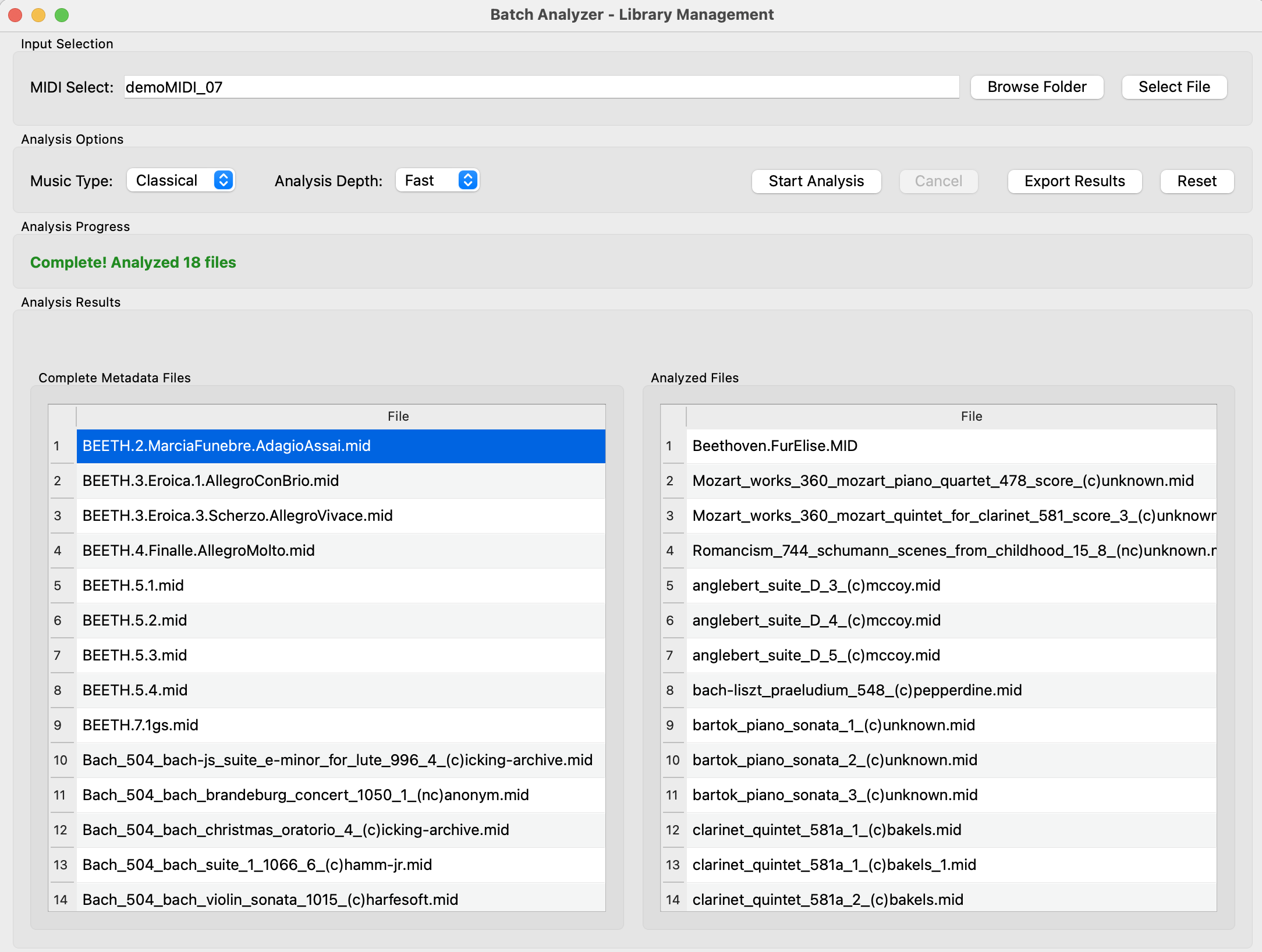

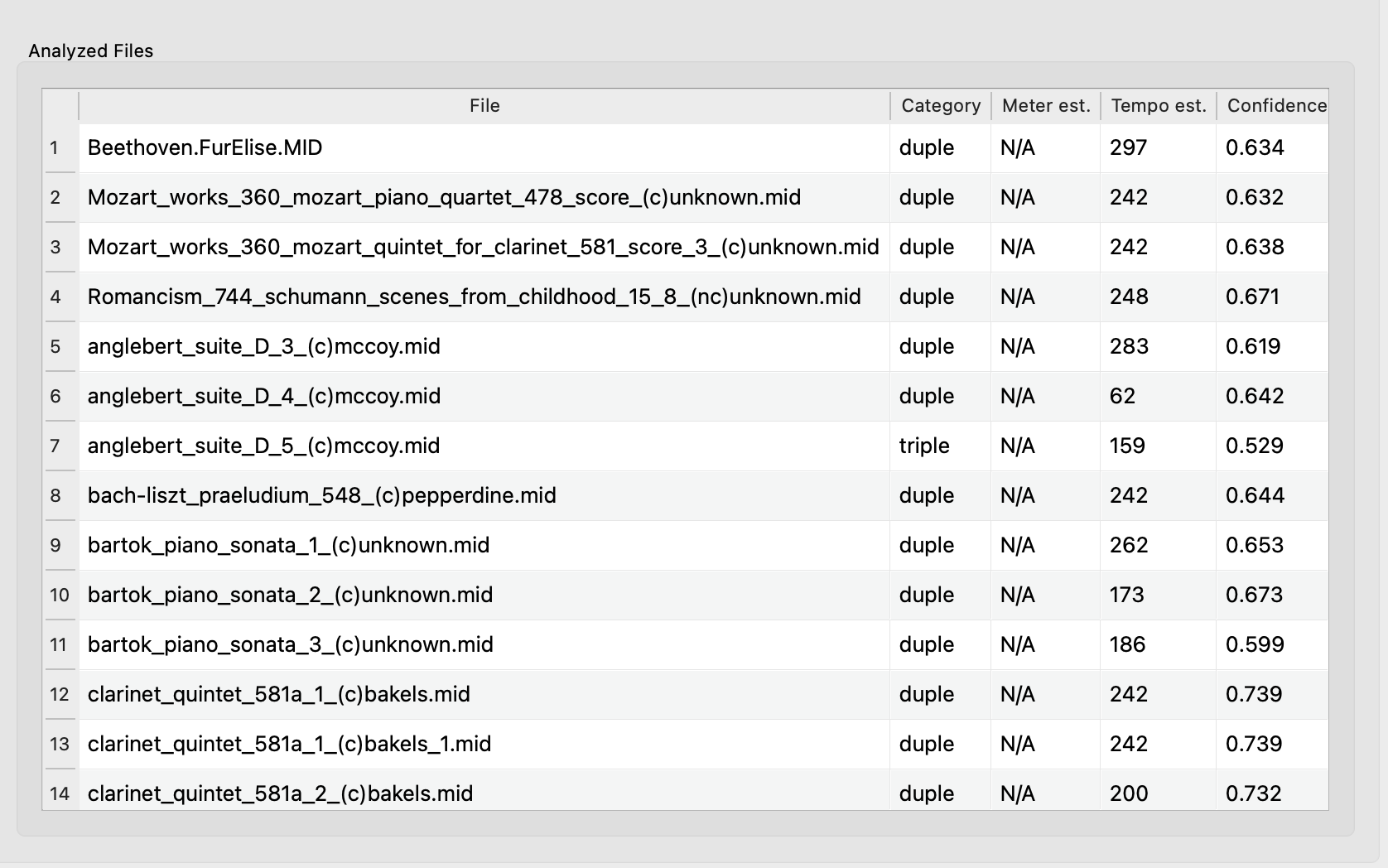

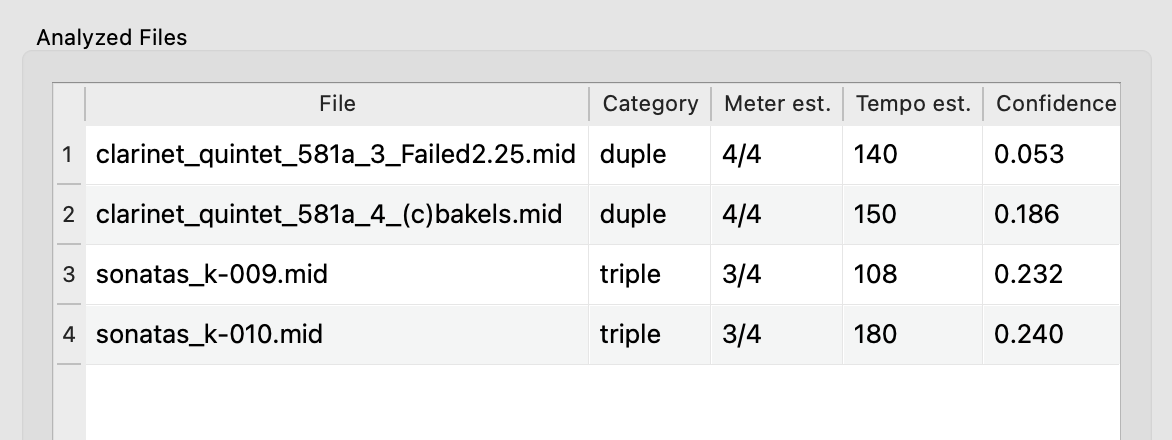

Batch Analysis for Meter Categorization

The goal of meter estimation here is not to "guess" the time signature, but to robustly categorize the music as "duple" or "triple" based on its perceived "meter feeling" (critical for RAS). There are two analysis modes. Fast Mode performs its analysis purely on MIDI data and does not report a specific meter. Deep Mode, by contrast, provides a probabilistic meter category, though only for reference. The algorithm uses a "validation strategy" that iterates over candidate meters. The system currently limits its scope to the most common regular meters (2/4, 3/4, 4/4), focusing on the macro-level duple/triple distinction rather than full time-signature identification.

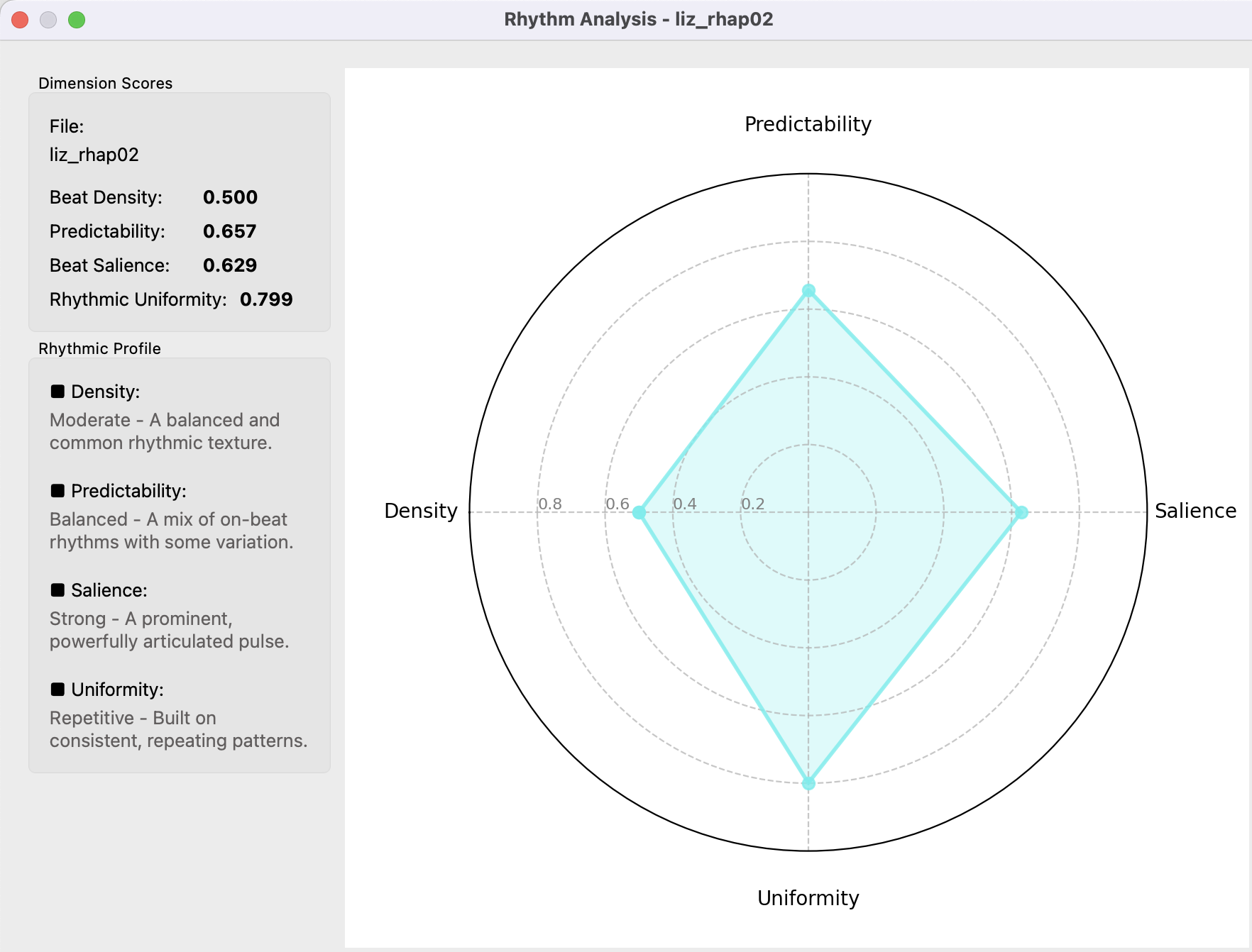

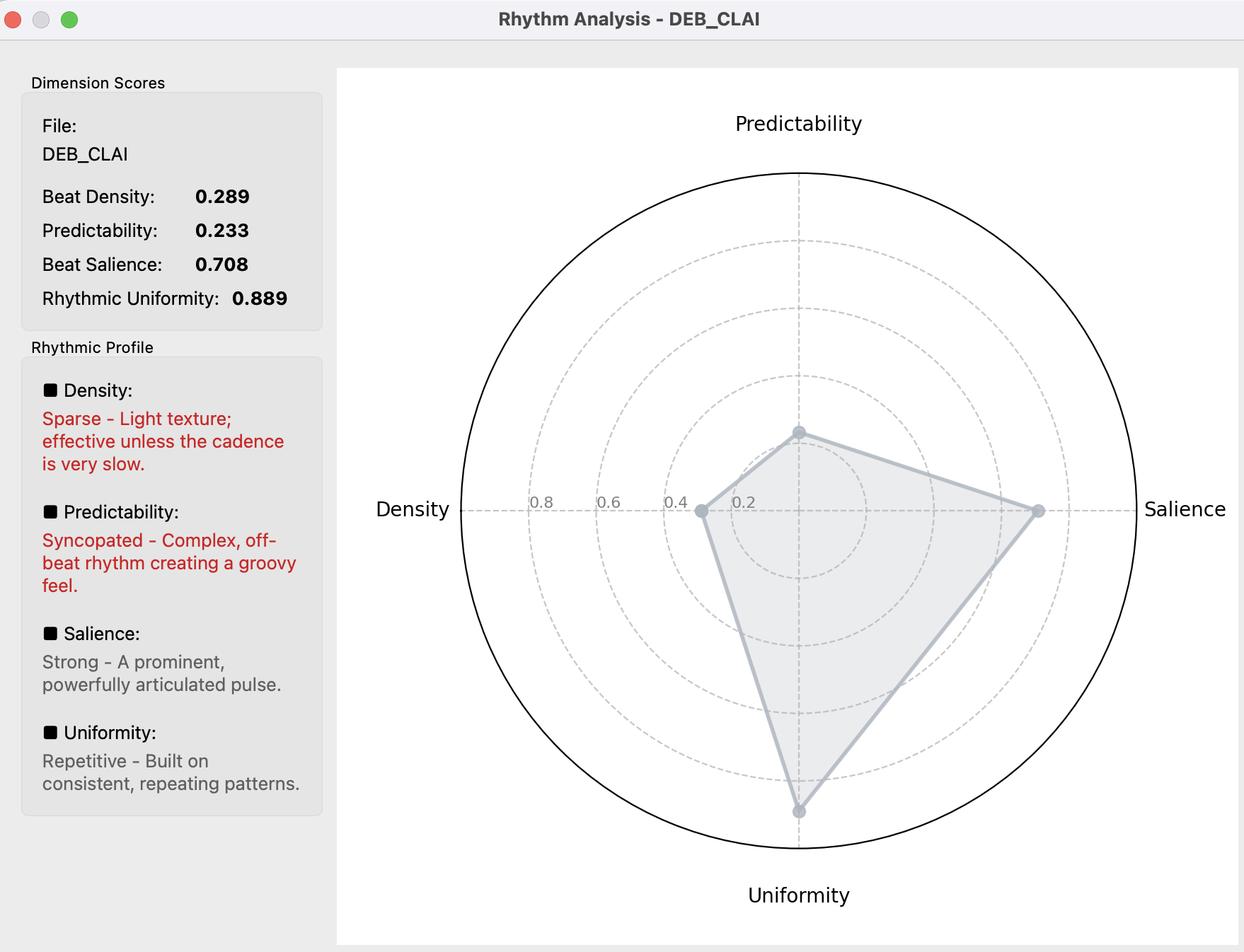

4-dim Rhythm Analyzer with Radar Chart Visualization

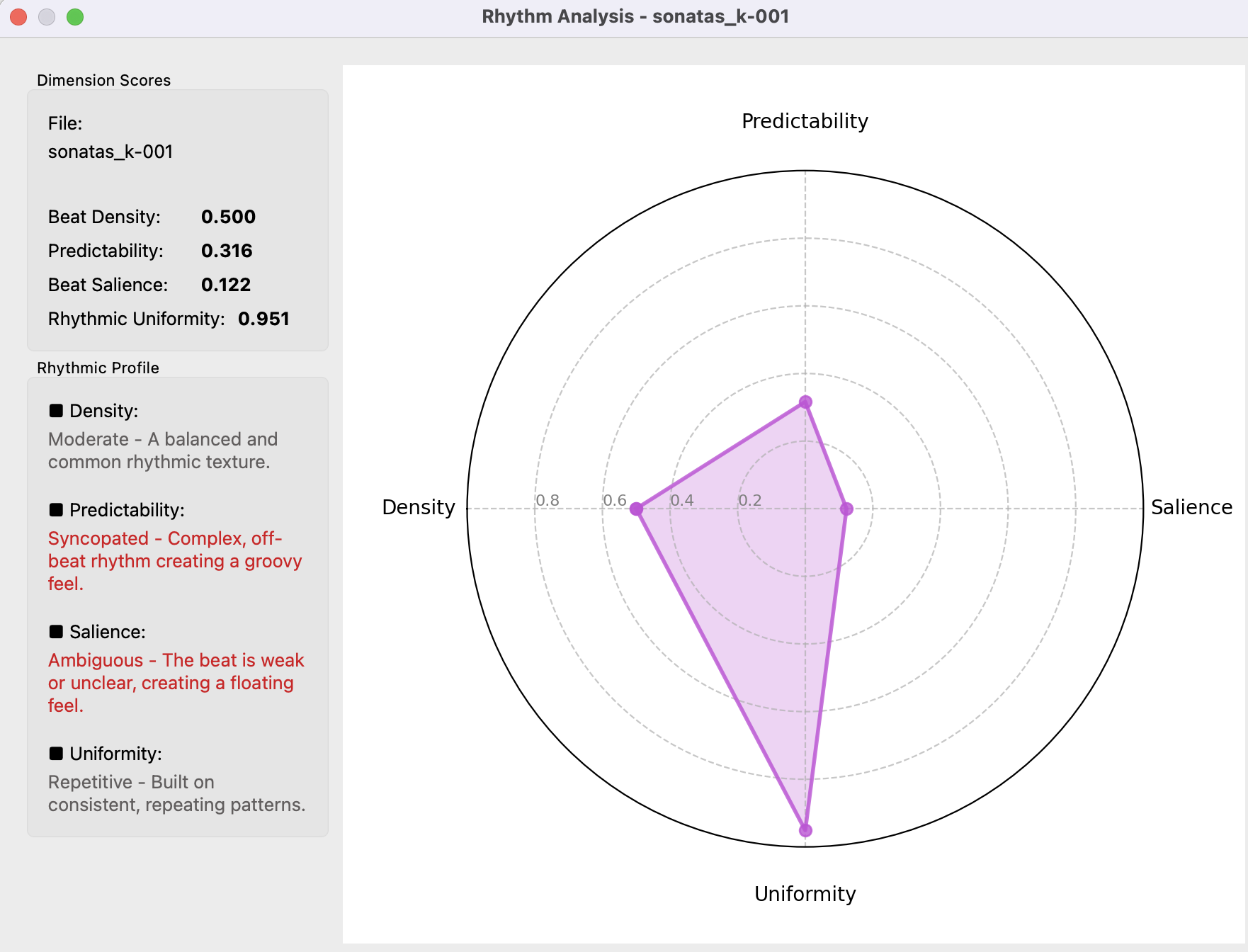

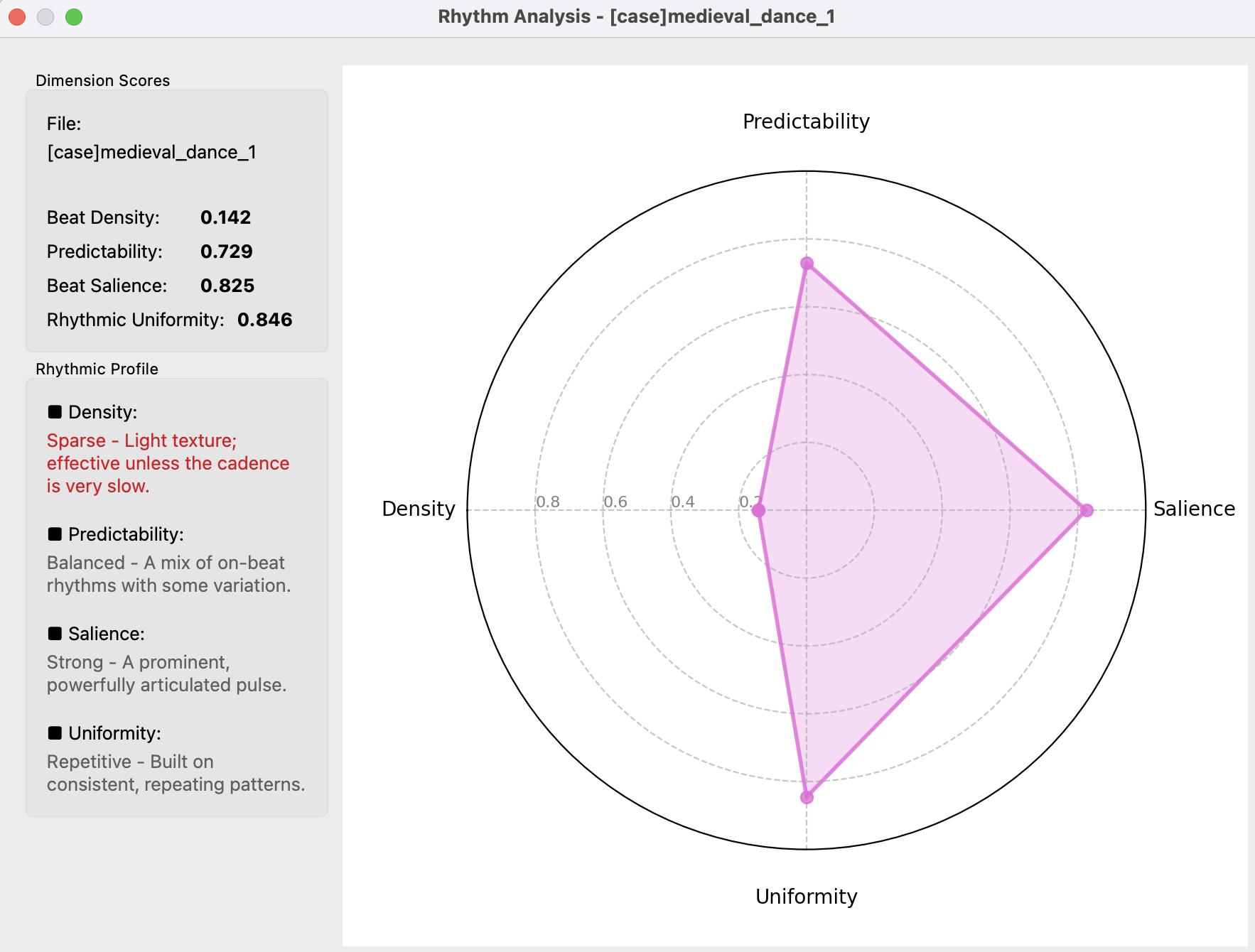

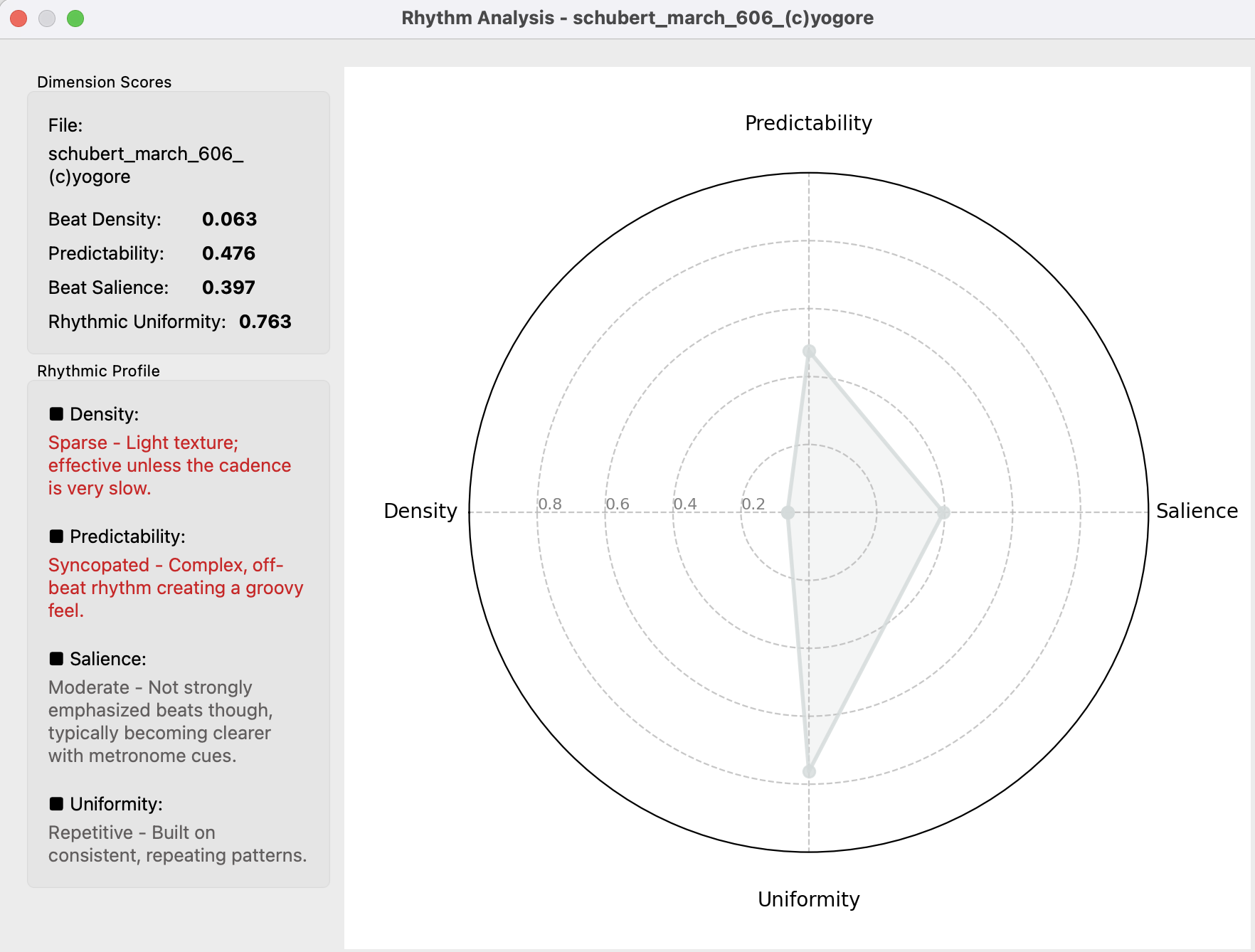

The 4-D analysis result is visualized on a Radar Chart (with randomly assigned colors). This visualization allows for immediate, comparative assessment of the music's rhythmic profile.

For instance, Debussy's Clair de Lune serves as an extreme example. Its low Predictability score suggests that the rhythm is likely too ambiguous for a listener to reliably tap or walk along with:

A Scarlatti keyboard sonata may also be difficult to follow, but due to a different profile, such as extremely low Beat Salience (a "flat" or non-accented rhythmic feel):

By contrast, some music sounds clearly "on-beat", which usually indicates a good candidate for RAS gait training:

Low note density is also a critical factor to consider, especially when paired with a patient's low cadence. Insufficient rhythmic information can lead to perceptual or cognitive processing failure rather than rhythmic entrainment.

Conversely, music with high speed and high density provides information that is too cognitively "dense" to process. This complexity can decrease the patient's perceived "joyfulness" or impair the brain's ability to encode the signals into a coherent "musical line".

This model, while effective as a first stage, is far from perfect. I believe there is significant room for improvement across its feature set, underlying algorithms, and dimensionality.

Algorithms

4-dim Model

Dimension I: Beat Density

- Objective: To measure the "busyness" or textural crowdedness of the music relative to its underlying pulse. This metric is independent of absolute tempo (BPM).

- Metric Definition: The average number of note onsets per beat (Notes Per Beat, NPB).

- Conceptual Implementation: The process involves two fundamental counts: the total number of musical events (notes) and the total number of beats within the piece. The ratio of these two counts yields the Beat Density.

- Algorithm & Formulas:

Let $\mathcal{N}$ be the set of all note onsets in the piece. The total number of notes is the cardinality of this set, $|\mathcal{N}|$.

Let $\mathcal{B}$ be the set of all detected beat events. The total number of beats is $|\mathcal{B}|$. The Beat Density is calculated as:

$$\text{BeatDensity} = \frac{|\mathcal{N}|}{|\mathcal{B}|}$$
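As a minimal sketch of the ratio above, the following assumes note onsets and beat times are already available as plain lists of seconds. The function name `beat_density` and the sample data are illustrative, not taken from the project code.

```python
def beat_density(note_onsets, beat_times):
    """Notes per beat: |N| / |B|."""
    if len(beat_times) == 0:
        raise ValueError("at least one beat is required")
    return len(note_onsets) / len(beat_times)


# Hypothetical data: 8 beats at 120 BPM filled with steady eighth notes.
beats = [i * 0.5 for i in range(8)]
onsets = [i * 0.25 for i in range(16)]
density = beat_density(onsets, beats)  # 16 onsets over 8 beats
```

Because only counts enter the ratio, playing the same excerpt at a different tempo leaves the value unchanged, which is the tempo independence stated in the objective.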

Dimension II: Predictability

-

Objective: To quantify how well the rhythm conforms to metrical expectations through a two-layer analysis: macro-level beat coverage and micro-level weighted alignment.

-

Metric Definition: A weighted combination of beat coverage (macro) and metrical alignment (micro).

-

Conceptual Implementation:

This employs a dual-layer approach. The macro layer assesses overall beat coverage (binary presence/absence), while the micro layer evaluates fine-grained alignment with metrical weights. The final score fuses both layers with configurable weights. -

Algorithm & Formulas:

-

Preprocessing: Extract note onsets, filter short notes (ornaments), generate musical beat grid.

-

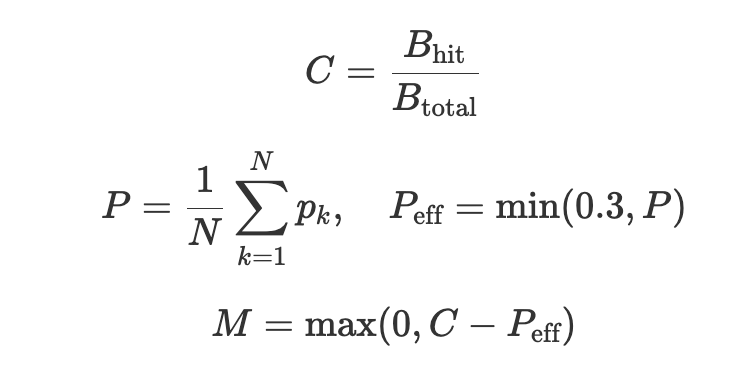

Macro Layer - Beat Coverage: Measures percentage of beats with aligned notes.

- Calculate dynamic tolerance based on musical tempo (1/4 beat duration)

- For each beat: check if any note falls within tolerance

- Compute coverage ratio:

beats_with_notes / total_beats - Apply off-beat penalties for notes outside tolerance (max 30% reduction)

-

Micro Layer - Weighted Alignment: Measures alignment quality with metrical positions.

- For each bar: quantize notes to 16th-note grid

- Compute metrical weights for each grid position

- Calculate alignment score: sum of weights at note positions

- Normalize by maximum possible alignment

-

Final Fusion: Weighted combination of macro and micro scores.

$$ S = w_m \cdot M + (1 - w_m) \cdot m $$

where:- (C) is beat coverage (fraction of beats with at least one aligned note),

- (P) is the average off-beat penalty per note and (P_{\text{eff}}) is the clamped penalty,

- (M) is the macro-layer score,

- (m) is the micro-layer score,

- (S) is the final predictability score,

- (w_m) is the macro weight (default 0.8).

-
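The two layers and their fusion can be sketched as follows. Several details are assumptions made for illustration and are not confirmed by the text above: the macro score is taken as $M = C \cdot (1 - P_{\text{eff}})$, the penalty $P$ is the share of onsets farther than the tolerance from every beat, and the 16-slot weight table for 4/4 is hypothetical.

```python
import numpy as np


def beat_coverage(onsets, beats, tol):
    """C: fraction of beats with at least one onset within +/- tol seconds."""
    onsets = np.asarray(onsets, dtype=float)
    return float(np.mean([np.any(np.abs(onsets - b) <= tol) for b in beats]))


def offbeat_penalty(onsets, beats, tol, cap=0.3):
    """P_eff: share of onsets outside the tolerance, clamped to cap (assumed form)."""
    onsets = np.asarray(onsets, dtype=float)
    beats = np.asarray(beats, dtype=float)
    dist = np.min(np.abs(onsets[:, None] - beats[None, :]), axis=1)
    return min(float(np.mean(dist > tol)), cap)


def micro_alignment(onsets, bar_start, bar_len, weights):
    """m: summed metrical weight at quantized onsets / best achievable sum."""
    weights = np.asarray(weights, dtype=float)
    n = len(weights)
    in_bar = [t for t in onsets if bar_start <= t < bar_start + bar_len]
    slots = {int(round((t - bar_start) / bar_len * n)) % n for t in in_bar}
    if not slots:
        return 0.0
    best = np.sort(weights)[::-1][:len(slots)].sum()
    return float(weights[list(slots)].sum() / best)


def predictability(M, m, w_m=0.8):
    """S = w_m * M + (1 - w_m) * m."""
    return w_m * M + (1.0 - w_m) * m


# One 4/4 bar at 120 BPM; tolerance is a quarter of the beat duration.
beat_len, bar_len = 0.5, 2.0
beats = [0.0, 0.5, 1.0, 1.5]
tol = beat_len / 4
weights_4_4 = [4, 1, 2, 1, 3, 1, 2, 1, 3, 1, 2, 1, 3, 1, 2, 1]  # hypothetical


def score(onsets):
    M = beat_coverage(onsets, beats, tol) * (1.0 - offbeat_penalty(onsets, beats, tol))
    m = micro_alignment(onsets, 0.0, bar_len, weights_4_4)
    return predictability(M, m)


on_beat = score([0.0, 0.5, 1.0, 1.5])       # every onset sits on a beat
off_beat = score([0.25, 0.75, 1.25, 1.75])  # every onset sits between beats
```

With the default $w_m = 0.8$ the macro layer dominates, so a fully off-beat bar scores low even though its onsets still land on valid 16th-note slots.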

Dimension III: Beat Salience

-

Objective: To measure how perceptually prominent beat events are relative to their immediate temporal neighborhood. A high salience score indicates that beats stand out clearly from surrounding off-beat events, while a low score suggests beats blend in with the overall rhythmic texture.

-

Metric Definition: The average ratio of onset energy at beat positions compared to surrounding off-beat regions, normalized to [0, 1].

-

Conceptual Implementation:

This analysis operates exclusively on symbolic MIDI data, eliminating the need for audio synthesis. The core principle is local contrast analysis: comparing the onset energy at beat positions against the average onset energy in the surrounding off-beat regions within a temporal window. -

Algorithm & Formulas:

-

Extract deterministic beat locations from MIDI tempo and time signature metadata to generate a beat grid.

-

Compute a frame-based onset strength envelope from the MIDI piano-roll representation, where each frame's energy is calculated as the sum of note velocities (or binary onsets) across all pitches.

-

For each beat position $b$, define a temporal window $W(b) = [b - \Delta, b + \Delta]$ and extract off-beat frames within this window, excluding the beat frame itself and a small exclusion radius.

-

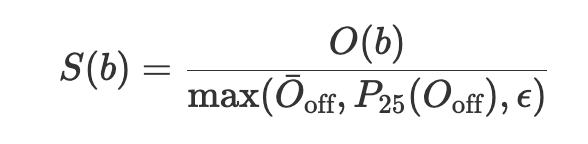

Compute the salience ratio for each beat:

where $O(b)$ is the onset energy at beat $b$, $ \bar{O} $ is the mean energy, $P_{25}$ is the 25th percentile. $\epsilon$ is used to prevent division by zero.

-

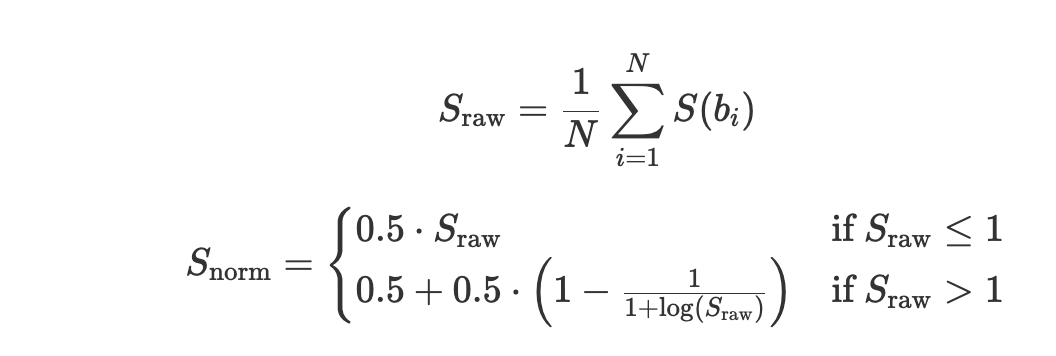

Calculate the raw salience score as the mean of all individual beat saliences, then normalize using sigmoid compression to map to [0, 1].

A higher salience score indicates beats that stand out more prominently from their temporal context.

-
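A simplified sketch of the local contrast idea is shown below. It is not the exact formula used by the project: the ratio here divides the beat energy by the mean off-beat energy only (the $P_{25}$ term is omitted), and the sigmoid compression is assumed to be `tanh(raw / 2)`, which is a logistic curve rescaled to map non-negative ratios into [0, 1). The window and exclusion sizes are illustrative.

```python
import numpy as np


def beat_salience(envelope, beat_frames, half_window=8, exclusion=1, eps=1e-9):
    """Mean beat-to-surroundings energy ratio, compressed into [0, 1)."""
    envelope = np.asarray(envelope, dtype=float)
    ratios = []
    for b in beat_frames:
        lo = max(0, b - half_window)
        hi = min(len(envelope), b + half_window + 1)
        idx = np.arange(lo, hi)
        # Off-beat frames: inside the window, outside the exclusion radius.
        off = envelope[idx[np.abs(idx - b) > exclusion]]
        if off.size:
            ratios.append(envelope[b] / (off.mean() + eps))
    if not ratios:
        return 0.0
    raw = float(np.mean(ratios))
    return float(np.tanh(raw / 2.0))  # assumed sigmoid-style compression


# Hypothetical envelopes: same texture, with and without accented beats.
beat_frames = [8, 24, 40, 56]
flat = np.full(64, 10.0)
accented = flat.copy()
accented[beat_frames] = 100.0

salience_flat = beat_salience(flat, beat_frames)
salience_accented = beat_salience(accented, beat_frames)
```

The flat texture gives a ratio near 1 for every beat, so its score stays in the middle of the range, while the accented version is pushed toward 1.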

Dimension IV: Rhythmic Uniformity

-

Objective: To measure the degree of consistency in the durations of rhythmic events. A high uniformity score indicates that the rhythm is constructed from a small set of recurring time intervals (e.g., steady eighth notes), while a low score suggests a wide and unpredictable variety of durations.

-

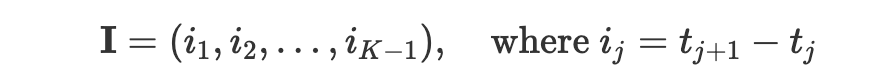

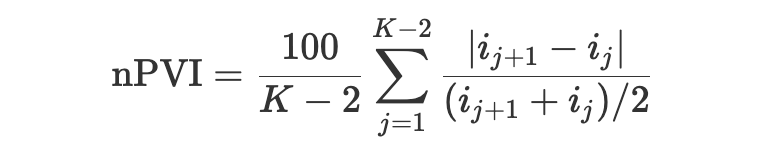

Metric Definition: An inverse measure of the Normalized Pairwise Variability Index (nPVI).

-

Conceptual Implementation:

This dimension is calculated by analyzing the sequence of time intervals between consecutive note onsets (Inter-Onset Intervals, or IOIs). The nPVI quantifies the average normalized difference between successive intervals. -

Algorithm & Formulas:

-

Extract the time series of all note onsets, $\mathcal{T} = (t_1, t_2, \dots, t_K)$, sorted chronologically.

-

Compute the sequence of Inter-Onset Intervals (IOIs), $\mathbf{I}$:

-

The nPVI is calculated as the average of the absolute difference between each pair of successive intervals, normalized by their sum.

-

The Rhythmic Uniformity score is an inverse function of the nPVI. An exponential decay function is suitable for mapping the unbounded nPVI score to a [0, 1] range.

$$ \text{Uniformity} = \exp(-k \cdot \text{nPVI}) $$

where $k$ is a small scaling constant (e.g., $k=0.05$) to be tuned empirically for a desirable score distribution.

-
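The steps above can be sketched with NumPy. This sketch assumes the commonly used nPVI definition, in which each difference is divided by the mean of the two intervals and the average is scaled by 100. Dropping zero-length intervals (simultaneous onsets in chords) is an extra assumption of this example.

```python
import numpy as np


def npvi(onsets):
    """Normalized Pairwise Variability Index of the inter-onset intervals."""
    t = np.sort(np.asarray(onsets, dtype=float))
    ioi = np.diff(t)
    ioi = ioi[ioi > 0]  # assumption: ignore simultaneous onsets (chords)
    if ioi.size < 2:
        return 0.0
    a, b = ioi[:-1], ioi[1:]
    return float(100.0 * np.mean(np.abs(a - b) / ((a + b) / 2.0)))


def rhythmic_uniformity(onsets, k=0.05):
    """Uniformity = exp(-k * nPVI)."""
    return float(np.exp(-k * npvi(onsets)))


# Steady eighth notes versus a long-short (dotted) pattern.
steady = [i * 0.5 for i in range(9)]
dotted = [0.0, 0.75, 1.0, 1.75, 2.0, 2.75, 3.0]

u_steady = rhythmic_uniformity(steady)
u_dotted = rhythmic_uniformity(dotted)
```

With $k = 0.05$, the dotted pattern (nPVI of 100 under this definition) lands very close to 0, which suggests that $k$ may need tuning if such rhythms should still register as moderately uniform.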

Batch Analysis (meter classification)

The batch analysis subsystem is a hybrid framework that integrates symbolic-domain (MIDI-based) and audio-domain analysis to balance computational efficiency and precision. It operates in two layers: a rapid screening layer for quick meter classification (duple vs. triple) and a deep analysis layer for detailed beat structure identification. This design addresses the core challenge of RAS music selection: efficiently filtering large music libraries.

Rapid Screening Layer (fast mode)

The process is an ensemble analysis combining Fourier tempogram and autocorrelation function (ACF) methods, followed by decision fusion.

1. MIDI Preprocessing

- Load MIDI files using libraries like `pretty_midi` and merge all instrument tracks into a unified symbolic sequence to capture global rhythmic information.

- Trim leading silence by detecting the first note onset and shifting the timeline to start at time zero, ensuring consistent periodicity analysis.

2. Onset Envelope Construction

- Convert discrete MIDI note events into a continuous onset strength envelope $o(t)$ for temporal analysis.

- Use chroma flux: Compute a 12-dimensional chroma energy vector $C(t)$ (energy per pitch class at time $t$), then derive $o(t)$ as the positive differences across chroma bins.

- Mathematically: $o[k] = \sum_{i=0}^{11} \max(0, C_i[k] - C_i[k-1])$, sampled at high resolution (e.g., 200 Hz) to preserve timing accuracy.
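A minimal sketch of the chroma flux envelope follows. It assumes notes are given as `(start_s, end_s, pitch, velocity)` tuples standing in for parsed MIDI notes, and that chroma energy is the summed velocity of sounding notes per pitch class; both are choices made for this example.

```python
import numpy as np


def chroma_flux_envelope(notes, fs=200):
    """o[k] = sum_i max(0, C_i[k] - C_i[k-1]) on a 12-bin chroma roll."""
    notes = list(notes)
    n_frames = int(np.ceil(max(end for _, end, _, _ in notes) * fs)) + 1
    chroma = np.zeros((12, n_frames))
    for start, end, pitch, velocity in notes:
        a = int(round(start * fs))
        b = max(a + 1, int(round(end * fs)))  # each note spans at least one frame
        chroma[pitch % 12, a:b] += velocity
    # Positive first difference per pitch class, with C[-1] taken as 0.
    flux = np.maximum(0.0, np.diff(chroma, axis=1, prepend=0.0))
    return flux.sum(axis=0)


# Hypothetical input: a C major arpeggio, one note every 0.5 s.
notes = [(0.0, 0.4, 60, 80), (0.5, 0.9, 64, 80), (1.0, 1.4, 67, 80)]
envelope = chroma_flux_envelope(notes)
onset_frames = np.nonzero(envelope)[0]
```

At 200 Hz each frame covers 5 ms, so the three onsets appear as isolated peaks at frames 0, 100, and 200, and note endings contribute nothing because negative differences are clipped.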

3. Tempogram-Based Scoring (Fourier Tempogram)

- Apply Short-Time Fourier Transform (STFT) to $o(t)$ to generate the Fourier tempogram $T(f, t)$, where $f$ represents tempo (BPM) and $t$ represents time.

- Average across time to obtain the global tempogram $\bar{T}(f)$, identifying multiple dominant tempos (up to three peaks) for robustness.

- Score harmonic relationships: For duple meters, evaluate energy at tempo multiples like $\{0.5\beta, \beta, 2\beta, 4\beta\}$; for triple meters, at $\{1.5\beta, 3\beta, 6\beta\}$, where $\beta$ is a dominant tempo.

- Compute duple energy and triple energy from integrated energy around these harmonics.
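The tempogram scoring can be sketched as below. The window length, hop, and integration width are assumptions, the dominant tempo $\beta$ is supplied by hand instead of being picked from peaks, and "energy" is taken as summed spectral magnitude.

```python
import numpy as np


def global_tempogram(envelope, fs=200, win_s=12.0, hop_s=1.0):
    """Time-averaged STFT magnitude of the onset envelope, indexed in BPM."""
    x = np.asarray(envelope, dtype=float)
    n, hop = int(win_s * fs), int(hop_s * fs)
    if len(x) < n:  # zero-pad short inputs to one full window
        x = np.pad(x, (0, n - len(x)))
    window = np.hanning(n)
    frames = np.array([x[i:i + n] * window
                       for i in range(0, len(x) - n + 1, hop)])
    spectrum = np.abs(np.fft.rfft(frames, axis=1)).mean(axis=0)
    bpm = np.fft.rfftfreq(n, d=1.0 / fs) * 60.0
    return bpm, spectrum


def harmonic_energy(bpm, spectrum, beta, ratios, width=5.0):
    """Sum the spectrum within +/- width BPM of each multiple ratio * beta."""
    return float(sum(spectrum[np.abs(bpm - r * beta) <= width].sum()
                     for r in ratios))


# Hypothetical signal: beats at 100 BPM, each split into triplets.
fs = 200
env = np.zeros(24 * fs)
env[::40] = 1.0    # an onset every 0.2 s (triplet subdivision)
env[::120] = 3.0   # a stronger onset every 0.6 s (the beat)

bpm, spec = global_tempogram(env, fs)
duple_energy = harmonic_energy(bpm, spec, 100.0, (0.5, 1, 2, 4))
triple_energy = harmonic_energy(bpm, spec, 100.0, (1.5, 3, 6))
```

For this signal the strong spectral lines sit at 300 and 600 BPM, which fall in the triple set, so the triple energy comes out higher than the duple energy.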

4. Autocorrelation-Based Scoring

- Compute the autocorrelation function $R(\tau)$ of $o(t)$: $R(\tau) = \int o(t) \cdot o(t+\tau) \, dt$.

- Using the primary dominant tempo $\beta$, predict cycle periods (e.g., $2T_0, 4T_0$ for duple, $3T_0, 6T_0$ for triple, where $T_0 = 60/\beta$).

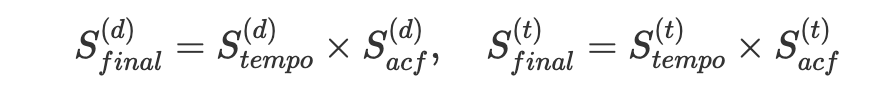

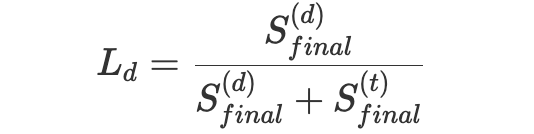

- Score by peak magnitudes at these lags, yielding $S{acf}^{(d)}$ and $S{acf}^{(t)}$.

5. Decision Fusion

-

Fuse scores multiplicatively:

-

Compute duple likelihood:

classifying as duple if $L_d > 0.55$ (threshold tunable for RAS alignment).
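A sketch of the autocorrelation scoring and the fusion step is given below. The fused scores are assumed to be the products of the tempogram and ACF scores per class, and the duple likelihood is assumed to be the duple share of the two fused scores; the exact forms used by the project may differ. The tempogram scores in the example are placeholder numbers.

```python
import numpy as np


def acf(envelope):
    """Normalized autocorrelation of the mean-removed onset envelope."""
    x = np.asarray(envelope, dtype=float)
    x = x - x.mean()
    full = np.correlate(x, x, mode="full")[len(x) - 1:]
    return full / (full[0] + 1e-12)


def lag_score(r, beta, multiples, fs=200):
    """Mean non-negative ACF value at lags of multiples * T0, T0 = 60 / beta."""
    t0 = 60.0 / beta
    lags = [int(round(m * t0 * fs)) for m in multiples]
    vals = [max(r[lag], 0.0) for lag in lags if lag < len(r)]
    return float(np.mean(vals)) if vals else 0.0


def duple_likelihood(s_tempo_d, s_tempo_t, s_acf_d, s_acf_t, eps=1e-12):
    """Assumed fusion: multiply per-class scores, then take the duple share."""
    d = s_tempo_d * s_acf_d
    t = s_tempo_t * s_acf_t
    return d / (d + t + eps)


# Hypothetical signal: beats at 120 BPM with an accent every third beat.
env = np.zeros(3200)
env[::100] = 1.0
env[::300] = 3.0

r = acf(env)
s_acf_d = lag_score(r, 120.0, (2, 4))
s_acf_t = lag_score(r, 120.0, (3, 6))
L_d = duple_likelihood(0.4, 0.6, s_acf_d, s_acf_t)  # 0.4 / 0.6 are placeholders
is_duple = L_d > 0.55
```

Because the accent pattern repeats every three beats, the ACF peaks at $3T_0$ and $6T_0$ are the strongest, and the example is classified as triple.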

Deep Analysis Layer (deep mode)

The deep analysis layer synthesizes MIDI to audio for perceptual accuracy. It employs neural networks and pattern validation to locate beats and downbeats.

1. Audio Processing and Activation Computation

- Synthesize MIDI to temporary WAV using a preprocessor pipeline, selecting duration based on music type (20s for popular, 30s for classical) and optionally including drums.

- Create an audio signal object for neural network processing.

- Compute joint beat and downbeat activations using a pre-trained RNN (RNNDownBeatProcessor): Outputs a 2D array where the first column represents beat activation and the second column represents downbeat activation.

2. Meter Hypothesis Testing via Dynamic Bayesian Networks

- Test multiple meter assumptions using Dynamic Bayesian Networks (DBN) for each hypothesis.

- For each meter, decode the optimal beat sequence from activations: DBN (DBNDownBeatTrackingProcessor) outputs an array of (time, beat_number) pairs, where beat_number indicates position within the measure (1 for downbeats).

- Extract downbeat positions (beat_number == 1) and compute raw confidence as the mean downbeat activation at these predicted downbeat times.

3. Confidence Scoring and Meter Selection

- Calculate enhanced confidence using a relative scoring model: Combine the best raw score (80% weight), relative margin over the second-best score (15% weight), and normalized score dispersion across hypotheses (5% weight).

- Select the meter with the highest raw confidence as the estimated meter; return both raw and enhanced confidence scores, plus detailed beat sequences for each hypothesis.
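The confidence calculation can be sketched on synthetic arrays, without running madmom. The relative margin and the dispersion term below are assumed definitions, since the text gives only their weights, and the frame rate of 100 fps is assumed for the activation array.

```python
import numpy as np


def raw_confidence(beats, downbeat_activation, fps=100):
    """Mean downbeat activation at the times where beat_number == 1."""
    beats = np.asarray(beats, dtype=float)
    activation = np.asarray(downbeat_activation, dtype=float)
    times = beats[beats[:, 1] == 1, 0]
    if times.size == 0:
        return 0.0
    frames = np.clip(np.round(times * fps).astype(int), 0, len(activation) - 1)
    return float(activation[frames].mean())


def enhanced_confidence(raw_scores, eps=1e-9):
    """0.8 * best + 0.15 * relative margin + 0.05 * dispersion (assumed forms)."""
    scores = np.sort(np.asarray(list(raw_scores.values()), dtype=float))[::-1]
    best = scores[0]
    second = scores[1] if scores.size > 1 else 0.0
    margin = (best - second) / (best + eps)
    dispersion = min(float(np.std(scores) / (np.mean(scores) + eps)), 1.0)
    return float(0.8 * best + 0.15 * margin + 0.05 * dispersion)


# Synthetic activation with downbeat peaks every 1.5 s (a 3/4 bar at 120 BPM).
activation = np.zeros(400)
activation[[0, 150, 300]] = [0.9, 0.8, 0.7]

# Decoded (time, beat_number) rows under each meter hypothesis.
times = [i * 0.5 for i in range(8)]
hypotheses = {
    3: [(t, i % 3 + 1) for i, t in enumerate(times)],
    4: [(t, i % 4 + 1) for i, t in enumerate(times)],
}
raw = {meter: raw_confidence(seq, activation) for meter, seq in hypotheses.items()}
selected = max(raw, key=raw.get)
enhanced = enhanced_confidence(raw)
```

The 3/4 hypothesis places all its downbeats on activation peaks, while the 4/4 hypothesis hits only one of its two, so 3/4 is selected.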

Timing correction

Workflow: Audio Preprocessing -> Beat Tracking -> Downbeat Probability Estimation -> Pattern-Based Validation -> Post-Processing

1. Audio Synthesis and Preprocessing

- Synthesizes a 15–20 second audio clip from the MIDI file

- Applies a 100ms fade-in to reduce onset artifacts

- Detects the first note onset to filter "ghost beats" before actual music starts

2. Feature Extraction

Stage 1: Beat Tracking

- Uses madmom's RNNBeatProcessor to extract beat activation functions

- Applies DBNBeatTrackingProcessor to infer stable beat times

- Estimates tempo to adjust the search window

Stage 2: Downbeat Probability Analysis

- Uses RNNBarProcessor to compute downbeat probabilities at each beat

- Calculates RMS energy and normalizes to [0, 1]

- Combines features into a 3-column array: `[time, downbeat_probability, rms_energy]`

3. Pattern-Based Validation (Core Algorithm)

Step 1: Candidate Selection

- Filters candidates using a dynamic threshold: `max(max_prob × 0.4, 0.1)`

- Selects top-3 candidates by probability

- Handles NaN values and missing data

Step 2: Pattern Hypothesis Testing

For each candidate:

- Generates expected downbeat positions: `candidate + i × meter_value` for i = 0, 1, 2, 3, 4

- Validates whether these positions show consistent downbeat characteristics

Step 3: Consistency Score Calculation

Two modes based on data availability:

If Sufficient Data:

- Computes madmom consistency: `mean_probability × (1 - min(std_deviation, 0.5))`

- Computes mean RMS energy at expected positions

- Combined score: `0.7 × madmom_consistency + 0.3 × mean_rms`

Else if Insufficient Data:

- Falls back to single-point scoring

- Combined score: `0.9 × madmom_probability + 0.1 × rms_energy`

Step 4: Position Penalty

- Applies penalty: `1 - (candidate_index / search_window) × 0.2`

- Reduces preference for later candidates, which have less validation context

Step 5: Final Selection

- Total score: `consistency_score × position_penalty`

- Selects candidate with highest total score
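Steps 1 to 5 can be sketched as a single function over the `[time, downbeat_probability, rms_energy]` array. The function name, the search window of 8 beats, and the rule that "sufficient data" means at least two expected positions inside the clip are assumptions made for this example.

```python
import numpy as np


def select_downbeat(features, meter=4, search_window=8):
    """Return (beat index, total score) of the best first-downbeat candidate."""
    feats = np.nan_to_num(np.asarray(features, dtype=float))  # NaN -> 0
    if len(feats) == 0:
        return 0, 0.0
    probs, rms = feats[:, 1], feats[:, 2]
    window = min(search_window, len(feats))

    # Step 1: dynamic threshold, then the top-3 candidates by probability.
    threshold = max(probs[:window].max() * 0.4, 0.1)
    ranked = np.argsort(probs[:window])[::-1]
    candidates = [int(i) for i in ranked if probs[i] >= threshold][:3]

    best_idx, best_score = 0, 0.0
    for c in candidates:
        # Step 2: expected downbeats at candidate + i * meter, i = 0..4.
        pos = [c + i * meter for i in range(5) if c + i * meter < len(feats)]
        if len(pos) >= 2:  # Step 3: pattern-level consistency
            consistency = probs[pos].mean() * (1.0 - min(probs[pos].std(), 0.5))
            combined = 0.7 * consistency + 0.3 * rms[pos].mean()
        else:              # fallback: single-point scoring
            combined = 0.9 * probs[c] + 0.1 * rms[c]
        # Steps 4 and 5: position penalty and final selection.
        total = combined * (1.0 - (c / search_window) * 0.2)
        if total > best_score:
            best_idx, best_score = c, float(total)
    return best_idx, best_score


# Hypothetical clip: 16 beats in 4/4 with a one-beat pickup (downbeats at 1, 5, 9, 13).
features = [[i * 0.5, 0.9 if i % 4 == 1 else 0.1, 0.8 if i % 4 == 1 else 0.4]
            for i in range(16)]
downbeat_idx, downbeat_score = select_downbeat(features)
```

Beats 1 and 5 pass the threshold with identical consistency, and the position penalty breaks the tie in favour of beat 1.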

4. Post-Processing

Confidence Thresholding

- If confidence < 0.1, assumes no anacrusis (returns index 0)

Earliest Position Adjustment

- Adjusts to earliest position within the first measure: `earliest_idx = selected_idx % meter_value`

- Prevents selecting a downbeat from a later measure
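The two post-processing rules are small enough to show together. The function name and argument order here are illustrative.

```python
def finalize_downbeat(selected_idx, confidence, meter_value, min_confidence=0.1):
    """Apply the confidence threshold, then wrap into the first measure."""
    if confidence < min_confidence:
        return 0  # low confidence: assume no anacrusis
    return selected_idx % meter_value


wrapped = finalize_downbeat(5, 0.76, 4)        # beat 5 in 4/4 maps back to beat 1
low_confidence = finalize_downbeat(5, 0.05, 4)
```

The modulo step means a detection in the second or third measure still yields the pickup length of the first measure.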

Architecture

The system implements a modular, process-isolated architecture.

Folder Structure

src/

├── main.py # Application entry point

├── __init__.py # Package initialization

├── core/ # Core playback engine

│ ├── __init__.py

│ ├── midi_engine.py # MIDI synthesis and playback engine

│ ├── precision_timer.py # High-precision timing system

│ ├── event_scheduler.py # Real-time event scheduling

│ ├── metronome.py # Audio metronome implementation

│ ├── ras_therapy_metronome.py # RAS-optimized metronome

│ ├── player_session_state.py # Session state management

│ ├── playback_mode.py # Playback mode definitions

│ ├── beat_timeline.py # Beat position management

│ ├── track_activity_monitor.py # Track visualization data

│ ├── midi_metadata.py # MIDI metadata handling

│ ├── gm_instruments.py # General MIDI instrument definitions

│ └── audio_cache.py # Audio file caching system

├── analysis/ # Musical analysis toolkit

│ ├── __init__.py

│ ├── anacrusis_detector.py # First downbeat detection

│ ├── beat_tracker_basic.py # Basic beat tracking

│ ├── beat_tracking_service.py # Beat tracking service layer

│ └── preprocessor.py # MIDI preprocessing utilities

├── batch_filter/ # Batch processing system

│ ├── __init__.py

│ ├── core/ # Core analysis algorithms

│ │ ├── __init__.py

│ │ ├── batch_processor.py # Multi-stage batch processor

│ │ ├── tempogram_analyzer.py # MIDI-based rhythm analysis

│ │ └── meter_estimator.py # Audio-based meter estimation

│ ├── cli/ # Command-line interface

│ │ ├── __init__.py

│ │ └── batch_analyzer_cli.py # CLI subprocess implementation

│ ├── ui/ # Batch analysis UI

│ │ ├── __init__.py

│ │ └── batch_analyzer_window.py # Independent analysis window

│ └── cache/ # Caching layer

│ ├── __init__.py

│ └── library_manager.py # SQLite-based result caching

├── multi_dim_analyzer/ # 4D rhythm analysis framework

│ ├── __init__.py

│ ├── config.py # Analysis configuration

│ ├── pipeline.py # Main analysis orchestration

│ ├── plotting.py # Visualization utilities

│ ├── beat_density.py # Dimension I: Beat density analysis

│ ├── predictability.py # Dimension II: Metrical predictability

│ ├── beat_salience.py # Dimension III: Beat salience

│ ├── rhythmic_uniformity.py # Dimension IV: Rhythmic uniformity

│ └── utils/ # Analysis utilities

│ ├── __init__.py

│ ├── beat_grid.py # Beat grid generation

│ └── midi_processor.py # MIDI processing utilities

└── ui/ # User interface components

├── __init__.py

├── gui.py # Main application window

├── playback_controls.py # Playback control widgets

├── track_visualization.py # Real-time track visualization

├── dialogs.py # Dialog windows

├── analysis_dialogs.py # Analysis-related dialogs

├── menu_manager.py # Menu system management

├── utilities.py # UI utility functions

├── file_info_display.py # File information display

├── anacrusis_tool_window.py # Timing correction tool window

├── rhythm_analysis_dialog.py # Rhythm analysis interface

├── rhythm_analysis_worker.py # Analysis worker thread

├── audio_player_launcher.py # Audio player launch utilities

├── audio_player_window.py # Audio player interface

└── beat_tracking_worker.py # Beat tracking background worker

Core Components

1. Playback Engine (src/core/)

- MidiEngine: Singleton FluidSynth-based MIDI synthesis with thread-safe audio management

- Precision Timer: Performance counter-based timing system achieving ±0.1ms accuracy

- Event Scheduler: Real-time MIDI event processing with timing offset correction

- RAS Metronome: Clinical-grade metronome with unified downbeat cueing for therapy sessions

2. Analysis Framework (src/analysis/)

- AnacrusisDetector: Pattern-based downbeat detection using madmom RNN analysis

- MidiBeatTracker: Audio synthesis-based beat tracking with tempo estimation

- Preprocessor: MIDI file preparation pipeline (loading, merging, trimming, synthesis)

3. Batch Processing System (src/batch_filter/)

- Process Isolation: Independent subprocess execution preventing UI blocking

- Dual-Layer Classification:

  - MIDI-based tempogram analysis for fast duple/triple feel detection

  - Audio-synthesized madmom RNN analysis for precise meter estimation

- SQLite Caching: Persistent result storage with MD5-based cache validation

- CLI Automation: Headless batch processing with JSON IPC and progress reporting

4. 4D Rhythm Analysis (src/multi_dim_analyzer/)

- AnalysisPipeline: Orchestrates four-dimensional rhythm quantification

- Dimension I - Beat Density: Notes-per-beat ratio analysis using sigmoid normalization

- Dimension II - Predictability: Metrical conformance via syncopation indexing

- Dimension III - Beat Salience: Perceptual prominence of beat positions

- Dimension IV - Rhythmic Uniformity: Inter-onset interval consistency analysis

5. User Interface (src/ui/)

- Main Player Window: Real-time playback controls with RAS therapy interface

- Batch Analyzer Window: Independent QMainWindow for large-scale MIDI processing

- Tool Windows: Detached analysis tools with IPC-based result communication

Technical Specifications

Performance Metrics

- Timing Precision: ±0.1ms beat synchronization with <0.01% tempo drift over 60 minutes

- Memory Management: Separated UI components preventing memory leaks

- Process Isolation: Subprocess crash containment with atomic JSON IPC

- Batch Processing: ~1-2 seconds per MIDI file with 80%+ cache hit rates

Audio Processing Pipeline

- MIDI Loading: PrettyMIDI-based parsing with instrument consolidation

- Audio Synthesis: FluidSynth rendering with configurable SoundFont support

- Feature Extraction: madmom RNN analysis for beat/downbeat probability estimation

- Pattern Validation: Multi-candidate evaluation with consistency scoring

Data Management

- SQLite Caching: Hash-based validation avoiding redundant analysis

- JSON IPC: Atomic file-based inter-process communication

- CSV Export: Structured data export for research applications
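Hash-validated caching can be sketched with the standard library alone. The table layout, class name, and JSON payload format below are assumptions for illustration; only the use of SQLite and MD5-based validation comes from the description above.

```python
import hashlib
import json
import sqlite3


def file_md5(path, chunk_size=1 << 16):
    """MD5 digest of a file, read in chunks to keep memory use flat."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


class ResultCache:
    """Stores one analysis result per file path, valid only while the hash matches."""

    def __init__(self, db_path=":memory:"):
        self.conn = sqlite3.connect(db_path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS results ("
            "path TEXT PRIMARY KEY, md5 TEXT NOT NULL, payload TEXT NOT NULL)"
        )

    def get(self, path):
        row = self.conn.execute(
            "SELECT md5, payload FROM results WHERE path = ?", (path,)
        ).fetchone()
        if row is not None and row[0] == file_md5(path):
            return json.loads(row[1])
        return None  # missing entry, or the file changed since it was cached

    def put(self, path, result):
        self.conn.execute(
            "INSERT OR REPLACE INTO results VALUES (?, ?, ?)",
            (path, file_md5(path), json.dumps(result)),
        )
        self.conn.commit()
```

A batch run would call `get` before analyzing each file and `put` afterwards, so an edited MIDI file is re-analyzed automatically because its stored hash no longer matches.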

Key Engineering Innovations

1. High-Precision Timing System

Implements a multi-layered timing architecture:

- Performance counter-based event scheduling

- Frame-rate independent metronome synchronization

- Real-time tempo adjustment with position continuity
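One common way to build performance counter-based scheduling is to compute absolute deadlines from a single start time and combine a coarse sleep with a short busy-wait. The sketch below illustrates that pattern; it is not taken from the project's `precision_timer.py`, and the 2 ms spin margin is an assumed value.

```python
import time


def beat_deadlines(start, bpm, count):
    """Absolute deadlines derived from the start time, so errors do not accumulate."""
    period = 60.0 / bpm
    return [start + i * period for i in range(count)]


def wait_until(deadline, spin_margin=0.002):
    """Sleep through most of the wait, then busy-wait on the performance counter."""
    while True:
        remaining = deadline - time.perf_counter()
        if remaining <= 0:
            return time.perf_counter()
        if remaining > spin_margin:
            time.sleep(remaining - spin_margin)


# Fire three metronome ticks at 600 BPM (0.1 s apart) and record when each fired.
start = time.perf_counter()
fired = [wait_until(d) for d in beat_deadlines(start, 600, 3)]
```

Scheduling against absolute deadlines instead of sleeping for one period at a time keeps a late tick from delaying all later ticks, which is one way to limit long-term tempo drift.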

2. Process-Isolated Batch Analysis

- Independent subprocess execution preventing UI freezing

- JSON file-based IPC with atomic operations

- Persistent caching reducing computational overhead
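Atomic file-based IPC is usually implemented by writing to a temporary file in the same directory and renaming it over the target. The sketch below shows that pattern with hypothetical function names; the project's actual message format is not specified here.

```python
import json
import os
import tempfile


def write_json_atomic(path, payload):
    """Write JSON so that readers see either the old file or the complete new one."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as fh:
            json.dump(payload, fh)
            fh.flush()
            os.fsync(fh.fileno())
        os.replace(tmp_path, path)  # atomic rename within one filesystem
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def read_json(path):
    """Read a JSON message written by write_json_atomic."""
    with open(path, "r", encoding="utf-8") as fh:
        return json.load(fh)
```

The subprocess would write progress or results with `write_json_atomic`, and the UI process can poll with `read_json` without ever seeing a half-written file.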

3. Multi-Modal Rhythm Analysis

- 4-dimensional rhythm quantification framework

- Hybrid MIDI/audio analysis pipeline

- Pattern-based validation for robust detection

4. Clinical RAS Optimization

- Medical-grade timing accuracy for therapy applications

- Unified downbeat cueing eliminating pickup beat interference

- Direct cadence control (20-180 steps/min) with automatic tempo calculation
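The tempo calculation behind cadence control can be sketched as a playback-rate ratio. This assumes one step per beat by default and that the player scales the file's notated tempo by a single factor; both are assumptions of this example, and `steps_per_beat` is a hypothetical parameter.

```python
def playback_rate_for_cadence(cadence_spm, source_bpm, steps_per_beat=1.0):
    """Rate factor that makes the music's beat match the target cadence."""
    if not 20 <= cadence_spm <= 180:
        raise ValueError("cadence must be within 20-180 steps/min")
    if source_bpm <= 0:
        raise ValueError("source tempo must be positive")
    target_bpm = cadence_spm / steps_per_beat
    return target_bpm / source_bpm


# A 120 BPM piece slowed down for a patient walking at 90 steps/min.
rate = playback_rate_for_cadence(90, 120)
```

A rate below 1 slows the piece down, so very low cadences on fast pieces call for large tempo reductions, which is where the note density concerns discussed earlier become relevant.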

System Requirements & Dependencies

Core Dependencies

- PyQt5: Modern GUI framework with responsive layout management

- numpy/scipy: Numerical computation and signal processing

- mido: MIDI playback and event scheduling

- pretty_midi: MIDI file parsing and manipulation

- madmom: Deep learning-based music information retrieval

- librosa: Audio feature extraction and analysis

- matplotlib: Visualization for rhythm analysis results

- FluidSynth: Real-time MIDI synthesis engine

Development Status

Phase 2 Complete: Core playback engine, batch analysis, and rhythm analysis framework fully implemented. The system supports both real-time playback for therapy sessions and large-scale batch processing for research applications, with comprehensive caching and automation capabilities.

Future work: Enhance robustness by improving the stability of the playback system, testing edge cases, and improving the UI design.